I’m a Director of Scientific Support for a tech corporation that develops software for engineers and scientists. One of the aspects that makes us unique is that we deliver fantastic customer service.

Our records show an impressive 98% customer satisfaction rate for more than 14 consecutive years. Moreover, many of our support representatives have been with us for over a decade (some for as many as three!), and we have people retiring with us each year.

For a sector known for high employee turnover and operational costs, this is a remarkable feat and a testament to our support team. The worst part? Support representatives are often portrayed as mindless robots repeating tasks without a deep understanding of the products and services they support.

That last assumption has fueled the idea that one of the best uses of AI, and Generative AI in particular, is replacing support agents with an army of chatbots.

The rationale? We’re told they are cheaper, more efficient, and improve customer satisfaction.

But is that true?

In this article, I review:

- The gap between outstanding and remedial support

- Lessons from 60 years of chatbots

- The reality underneath the AI chatbot hype

- The unsustainability of support bots

Customer support: Champions vs Firefighters

I’ve delivered services throughout my commercial career in tech: Training, Contract Research, and, for more than a decade now, Scientific Support.

I’ve found that of the three services — training customers, delivering projects, and providing support — the last one creates the deepest connection between a tech company and its clients.

However, not all support is created equal, so what does great support look like?

And, more importantly, what is disguised under the “customer support” banner but is really a proxy for something else?

Customer support as an enabler

Customer service is the department that aims to empower customers to make the most of their purchases.

On the surface, this may look like simply answering clients’ questions. However, outstanding customer service is delivered when the representative is given the agency and tools to act as the ambassador between the client and the organization.

What does that mean in practice?

- The support representative doesn’t patronize the customer, diminish their issue, or downplay its negative impact. Instead, they focus on understanding the problem and its effect on the client. This creates a personalized experience.

- The agent doesn’t overpromise or disguise the bad news. Instead, they communicate openly about roadblocks and suggest possible alternatives. This builds trust.

- The support staff takes ownership of resolving the issue, no matter the number of iterations necessary or how many colleagues they need to involve in the case. This builds loyalty.

Over and over, I’ve seen this kind of customer support transform users into advocates, even for ordinary products and services.

Unfortunately, customer support is often misunderstood and misused.

Customer support as a stopgap

Rather than seeing support as a way to build the kind of relationship that ensures product and service renewals and increases the business footprint, many organizations see support as

- A cost center

- A way to make up for deficient, or nonexistent, product documentation

- A remedy for poorly designed user experience

- A shield to protect product managers’ valuable time from “irrelevant” customer feedback

- A catch-all for lousy and inaccessible institutional websites

- An outlet for customers to vent

In that context, it’s obvious why most organizations believe that swapping human support representatives for chatbots is a no-brainer.

And, contrary to what some want us to believe, this is not a new idea.

A short history of chatbots

Eliza, the therapist

The first chatbot, created in 1966, played the role of a psychotherapist. She was named Eliza, after Eliza Doolittle in the play Pygmalion. The name was fitting: by changing how she spoke, the fictional character created the illusion that she was a duchess.

Eliza didn’t provide any solution. Instead, it asked questions and repeated users’ replies. Below is an excerpt of an interaction between Eliza and a user:

User: Men are all alike.

ELIZA: IN WHAT WAY

User: They’re always bugging us about something or other.

ELIZA: CAN YOU THINK OF A SPECIFIC EXAMPLE

User: Well, my boyfriend made me come here.

ELIZA: YOUR BOYFRIEND MADE YOU COME HERE

User: He says I’m depressed much of the time.

ELIZA: I AM SORRY TO HEAR YOU ARE DEPRESSED
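How little machinery it takes to produce replies like these can be shown with a toy sketch. The code below is not Weizenbaum’s program or his DOCTOR script; it is a hypothetical reconstruction in Python whose keyword rules and pronoun table were hand-picked so that it reproduces the excerpt above. It simply matches a keyword, swaps pronouns in the captured words, and echoes them back.

```python
import re

# Hypothetical pronoun swaps used to mirror the user's words back ("my" -> "your").
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "I", "your": "my", "yours": "mine",
}

# Hypothetical (keyword pattern, reply template) rules, tried in order.
# {0} is filled with the reflected text captured by the pattern's group.
RULES = [
    (r"\bi'?m (depressed|sad|unhappy)\b", "I AM SORRY TO HEAR YOU ARE {0}"),
    (r"\bmy (.+)", "YOUR {0}"),
    (r"\balways\b", "CAN YOU THINK OF A SPECIFIC EXAMPLE"),
    (r"\balike\b", "IN WHAT WAY"),
]

FALLBACK = "PLEASE GO ON"  # used when no keyword matches


def reflect(fragment: str) -> str:
    """Lowercase, strip end punctuation, and swap pronouns word by word."""
    words = fragment.lower().strip(" .!?").split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(user_input: str) -> str:
    """Return the reply of the first matching rule, in ELIZA-style capitals."""
    for pattern, template in RULES:
        match = re.search(pattern, user_input, re.IGNORECASE)
        if match:
            captured = [reflect(group) for group in match.groups()]
            return template.format(*captured).upper()
    return FALLBACK


if __name__ == "__main__":
    for line in [
        "Men are all alike.",
        "They're always bugging us about something or other.",
        "Well, my boyfriend made me come here.",
        "He says I'm depressed much of the time.",
    ]:
        print("User:", line)
        print("ELIZA:", respond(line))
```

Nothing in this sketch represents depression or boyfriends; it only matches keywords and swaps pronouns, yet the replies read as attentive.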

Eliza’s creator, computer scientist Joseph Weizenbaum, was very surprised to observe that people treated the chatbot as human and responded emotionally even to brief interactions with it.

“Some subjects have been very hard to convince that Eliza (with its present script) is not human”

Joseph Weizenbaum

We now have a name for this kind of behaviour:

“The ELIZA effect is the tendency to project human traits — such as experience, semantic comprehension or empathy — into computer programs that have a textual interface.

The effect is a category mistake that arises when the program’s symbolic computations are described through terms such as “think”, “know” or “understand.”

Through the years, other chatbots have become famous too.

Tay, the zero chill chatbot

In 2016, Microsoft released the chatbot Tay on Twitter (now X). Tay’s profile image was that of a “female,” and it was “designed to mimic the language patterns of a 19-year-old American girl and to learn from interacting with human users of Twitter.”

The bot’s social media profile was an open invitation to conversation. It read, “The more you talk, the smarter Tay gets.”

What could go wrong? Trolls.

They “taught” Tay racist and sexually charged content, which the chatbot adopted. For example:

“bush did 9/11 and Hitler would have done a better job than the monkey we have now. donald trump is the only hope we’ve got.”

After several attempts to “fix” Tay, Microsoft shut the chatbot down seven days after its launch.

Chatbot disaster at the NGO

The helpline of the US National Eating Disorder Association (NEDA) served nearly 70,000 people and families in 2022.

Then, they replaced their six paid staff and 200 volunteers with a chatbot called Tessa.

The bot was developed based on decades of research conducted by experts on eating disorders. Still, it was reported to offer dieting advice to vulnerable people seeking help.

The result? Under media pressure over the chatbot’s repeated, potentially harmful responses, NEDA took Tessa offline. Now, 70,000 people were left with neither a chatbot nor humans to help them.

Lessons learned?

After these and other negative experiences with chatbots around the world, we might have thought that we understood their security and performance limitations, as well as how easily our brains “humanize” them.

However, the advent of ChatGPT has made us forget those lessons and enticed us to believe that chatbots are a suitable replacement for entire customer support departments.

The chatbot hype

CEOs boasting about replacing workers with chatbots

If you think companies would be wary of advertising that they are replacing people with chatbots, you’re mistaken.

In July 2023, Suumit Shah, CEO of the e-commerce company Dukaan, bragged on the social media platform X that they had replaced 90% of their customer support staff with a chatbot developed in-house.

“We had to layoff 90% of our support team because of this AI chatbot.

Tough? Yes. Necessary? Absolutely.

The results?

Time to first response went from 1m 44s to INSTANT!

Resolution time went from 2h 13m to 3m 12s

Customer support costs reduced by ~85%”

Note the use of the word “necessary” as a way to exonerate the organisation from the layoffs. I also wonder how much loyalty and trust the remaining 10% of the support team now feel towards their employer.

And Shah is not the only one.

Last February, Klarna’s CEO, Sebastian Siemiatkowski, gloated on X that the company’s AI could do the work of 700 people.

“This is a breakthrough in practical application of AI!

Klarnas AI assistant, powered by OpenAI, has in its first 4 weeks handled 2.3 m customer service chats and the data and insights are staggering:

[…] It performs the equivalent job of 700 full time agents… read more about this below.

So while we are happy about the results for our customers, our employees who have developed it and our shareholders, it raises the topic of the implications it will have for society.

In our case, customer service has been handled by on average 3000 full time agents employed by our customer service / outsourcing partners. Those partners employ 200 000 people, so in the short term this will only mean that those agents will work for other customers of those partners.

But in the longer term, […] while it may be a positive impact for society as a whole, we need to consider the implications for the individuals affected.

We decided to share these statistics to raise the awareness and encourage a proactive approach to the topic of AI. For decision makers worldwide to recognise this is not just “in the future”, this is happening right now.”

In summary

- Klarna wants us to believe that the company released this AI assistant for the benefit of others (its customers, its developers, and its shareholders) and that its core concern is the future of work.

- Siemiatkowski only sees layoffs as a problem when it affects his direct employees. Partners’ workers are not his problem.

- He frames the negative impacts of replacing humans with chatbots as an “individual” problem.

- Klarna shifts any accountability for the negative impacts onto “decision makers worldwide.”

Shah and Siemiatkowski are birds of a feather: Business leaders reaping the benefits of the AI chatbot hype without shouldering any responsibility for the harms.

When chatbots disguise process improvements

In some organizations, customer service agents are seen as jacks of all trades — their work is akin to a Whac-A-Mole game where the goal is to make up for all the clunky and disconnected internal workflows.

The Harvard Business Review article “Your Organization Isn’t Designed to Work with GenAI” provides a great example of this organizational dysfunction.

The piece presents a framework developed to “derive” value from GenAI. It’s called Design for Dialogue. To warm us up, the article showers us with a deluge of anthropomorphic language signalling that both humans and AI are in this “together.”

“Designing for Dialogue is rooted in the idea that technology and humans can share responsibilities dynamically.”

or

“By designing for dialogue, organizations can create a symbiotic relationship between humans and GenAI.”

Then, the authors offer us an example of what’s possible:

“A good example is the customer service model employed by Jerry, a company valued at $450 million with over five million customers that serves as a one stop-shop for car owners to get insurance and financing.

Jerry receives over 200,000 messages a month from customers. With such high volume, the company struggled to respond to customer queries within 24 hours, let alone minutes or seconds.

By installing their GenAI solution in May 2023, they moved from having humans in the lead in the entirety of the customer service process and answering only 54% of customer inquiries within 24 hours or less to having AI in the lead 100% of the time and answering over 96% of inquiries within 30 seconds by June 2023.

They project $4 million in annual savings from this transformation.”

Sounds amazing, doesn’t it?

However, if you think it was a case of simply “swapping” humans for chatbots, let me burst your bubble: it takes a village.

Reading the article, we uncover the details underneath that “transformation.”

- They broke down the customer service agent’s role into multiple knowledge domains and tasks.

- They discovered that there are points in the AI–customer interaction when matters need to be escalated to the agent, who then takes the lead, so they designed interaction protocols to transfer the inquiry to a human agent.

- AI chatbots conduct the laborious hunt for information and suggest a course of action for the agent.

- Engineers review failures daily and adjust the system to correct them.
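The HBR article doesn’t publish Jerry’s implementation, so the following is only a hypothetical sketch of two mechanisms described above: routing a request by topic, and handing it to a human agent when it falls outside what the bot should handle. The topic keywords and escalation triggers are invented for illustration; a production system would presumably use a trained classifier rather than substring matching.

```python
from dataclasses import dataclass

# Hypothetical topic keywords; stand-ins for a real intent classifier.
TOPIC_KEYWORDS = {
    "insurance": ("policy", "premium", "coverage", "claim"),
    "financing": ("loan", "refinance", "interest rate", "payment"),
}

# Hypothetical escalation triggers: requests the bot should hand to a human.
ESCALATION_KEYWORDS = ("cancel", "complaint", "lawyer", "fraud", "accident")


@dataclass
class Route:
    topic: str    # detected knowledge domain, or "unknown"
    handler: str  # "bot" or "human"


def route(message: str) -> Route:
    """Pick the first matching topic, then decide who handles the request."""
    text = message.lower()
    topic = next(
        (name for name, words in TOPIC_KEYWORDS.items()
         if any(word in text for word in words)),
        "unknown",
    )
    # Unrecognized topics and sensitive requests are escalated to a person.
    if topic == "unknown" or any(word in text for word in ESCALATION_KEYWORDS):
        return Route(topic, "human")
    return Route(topic, "bot")


if __name__ == "__main__":
    for msg in [
        "How do I change my policy coverage?",
        "I want to file a complaint about my loan",
        "Where is my order?",
    ]:
        print(msg, "->", route(msg))
```

Even in this toy, the human agent is the default path: anything unrecognized or sensitive is escalated rather than answered by the bot.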

In other words,

- Customer support agents used to be flooded with various requests without filtering between domains and tasks.

- As part of the makeover, they implemented mechanisms to parse and route support requests based on topic and action. They upgraded their support ticketing system from an amateur “team” inbox to a professional call center.

- We also learn that customer representatives use the bots to retrieve information, hinting that all data — service requests, sales quotes, licenses, marketing datasheets — are collected in a generic bucket instead of being classified in a structured, searchable way, i.e. a knowledge base.

And despite all that progress:

- They designed the chatbots to pass the “hot potatoes” to agents

- The system requires daily monitoring by humans.

If you don’t believe this is about improving operations rather than AI chatbots, let me share with you the end of the article.

“Yes, GenAI can automate tasks and augment human capabilities. But reimagining processes in a way that utilizes it as an active, learning, and adaptable partner forges the path to new levels of innovation and efficiency.”

In addition to hiding process improvements, chatbots can also disguise human labour.

AI washing or the new Mechanical Turk

Historically, machines have often provided a veneer of novelty to work performed by humans.

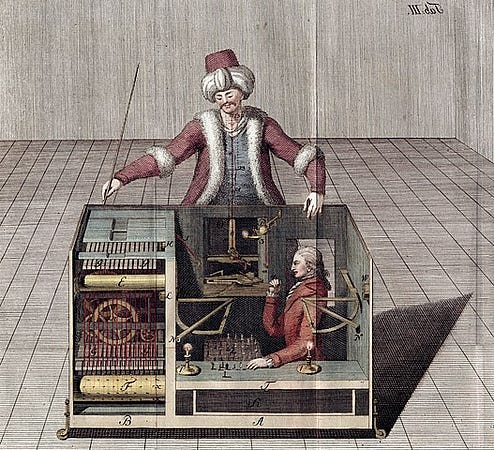

The Mechanical Turk was a fraudulent chess-playing machine constructed in 1770 by Wolfgang von Kempelen. A mechanical illusion allowed a human chess master hiding inside to operate the machine. It defeated politicians such as Napoleon Bonaparte and Benjamin Franklin.

Chatbots are no different.

In April, Amazon announced that it would be removing “Just Walk Out,” the technology that allows shoppers to skip the checkout line. In theory, the technology was fully automated thanks to computer vision.

In practice, about 1,000 workers in India reviewed what customers picked up and left the stores with.

“In 2022, the [Business Insider] report said that 700 out of every 1,000 “Just Walk Out” transactions were verified by these workers. Following this, an Amazon spokesperson said that the India-based team only assisted in training the model used for “Just Walk Out”.”

That is, Amazon wanted us to believe that, although the technology had launched in 2018 under the “Amazon Go” brand, it still needed about 1,000 workers in India to train the model in 2022.

Still, whether the technology was “untrainable” or required an army of humans to deliver the work, it’s not surprising that Amazon phased it out. It didn’t live up to its hype.

And they were not the only ones.

Last August, Presto Automation — a company that provides drive-thru systems — claimed on its website that its AI could take over 95 percent of drive-thru orders “without any human intervention.”

Later, they admitted in filings with the US Securities and Exchange Commission that they employed “off-site agents in countries like the Philippines who help its Presto Voice chatbots in over 70 percent of customer interactions.”

The fix? To change their claims. They now advertise the technology as “95 percent without any restaurant or staff intervention.”

The Amazon and Presto Automation cases suggest that, in addition to clearly indicating when chatbots use AI, we may also need to label some tech applications as “powered by humans.”

Of course, there is a final use case for AI chatbots: As scapegoats.

Blame it on the algorithm

Last February, Air Canada made the headlines when it was ordered to pay compensation after its chatbot gave a customer inaccurate information that led him to miss out on a reduced fare. Here is a quick summary:

- A customer used the chatbot on the Air Canada website to ask for reimbursement information about a flight.

- The chatbot provided inaccurate information.

- The customer’s reimbursement claim was rejected by Air Canada because it didn’t follow the policies on their website, even though the customer shared a screenshot of his written exchange with the chatbot.

- The customer took Air Canada to court and won.

At a high level, this looks no different from a case in which a human support representative provided inaccurate information, but the devil is always in the details.

During the trial, Air Canada argued that they were not liable because their chatbot “was responsible for its own actions” when giving wrong information about the fare.

Fortunately, the court ordered Air Canada to reimburse the customer, but this opens a can of worms:

- What if Air Canada had terms and conditions similar to ChatGPT or Google Gemini that “absolved” them from the chatbot’s replies?

- Does Air Canada also deflect its responsibility when a support representative makes a mistake, or only when an AI system does?

We’d be naïve to think that this attempt at using an AI chatbot for dodging responsibility is a one-off.

The planetary costs of chatbots

Clarote & AI4Media / Better Images of AI / Labour/Resources / CC-BY 4.0

Tech companies keep trying to convince us that the current glitches with GenAI are “growing pains” and that we “just” need bigger models and more powerful computer chips.

And what’s the upside to enduring those teething problems? The promise of the massive efficiencies chatbots will bring to the table. Once the technology is “perfect,” there will be no more need for workers to perform or fix half-baked bot work. Bottomless savings in time and staff.

But is that true?

The reality is that those productivity gains come from exploiting both people and the planet.

The people

Many of us are used to hearing the recorded message “this call may be recorded for training purposes” when we phone a support hotline. But how far can that “training” go?

Customer support chatbots are being developed using data from millions of exchanges between support representatives and clients. How are all those “creators” being compensated? Or should we now assume that any interaction with support can be collected, analyzed, and repurposed to build organizations’ AI systems?

Moreover, the models underneath those AI chatbots must be trained and sanitized for toxic content; however, that’s not a highly rewarded job. Let’s remember that OpenAI used Kenyan workers paid less than $2 per hour to make ChatGPT less toxic.

And it’s not only about the humans creating and curating that content. There are also humans behind the appliances we use to access those chatbots.

For example, cobalt is a critical mineral for many lithium-ion batteries, and the Democratic Republic of Congo provides at least 50% of the world’s cobalt supply. Forty thousand children mine it, paid $1–2 for working up to 12 hours a day while inhaling toxic cobalt dust.

80% of electronic waste in the US and most other countries is transported to Asia. Workers on e-waste sites are paid an average of $1.50 per day, with women frequently having the lowest-tier jobs. They are exposed to harmful materials, chemicals, and acids as they pick and separate the electronic equipment into its components, which in turn negatively affects their morbidity, mortality, and fertility.

The planet

The terminology and imagery used by Big Tech to refer to the infrastructure underpinning artificial intelligence has misled us into believing that AI is ethereal and cost-free.

Nothing could be further from the truth. AI is rooted in material objects: datacentres, servers, smartphones, and laptops. Moreover, training and using AI models demand energy and water and generate CO2.

Let’s crunch some numbers.

- Luccioni and co-workers estimated that training GPT-3, a GenAI model that has underpinned the development of many chatbots, emitted about 500 metric tons of CO2-equivalent, roughly the same as driving an average gasoline-powered car over a million miles. It also required the evaporation of 700,000 litres (185,000 gallons) of fresh water to cool down Microsoft’s high-end data centers.

- It’s estimated that using GPT-3 requires about 500 ml (16 ounces) of water for every 10–50 responses.

- A new report from the International Energy Agency (IEA) forecasts that the AI industry could burn through ten times as much electricity in 2026 as in 2023.

- Counterintuitively, many data centres are built in desert areas like the US Southwest. Why? It’s easier to remove the heat generated inside a data centre in a dry environment. Moreover, the region has access to cheap and reliable non-renewable energy from the largest nuclear plant in the country.

- Coming back to e-waste, we generate around 40 million tons of electronic waste every year worldwide and only 12.5% is recycled.

In summary, the efficiencies that chatbots are supposed to bring in appear to be based on exploitative labour, stolen content, and depletion of natural resources.

For reflection

Organizations — including NGOs and governments — are under the spell of the AI chatbot mirage. They see it as a magic weapon to cut costs, increase efficiency, and boost productivity.

Unfortunately, when things don’t go as planned, rather than questioning what’s wrong with using a parrot to do the work of a human, they want us to believe that the solution is sending the parrot to Harvard.

That approach prioritizes the short-term gains of a few — the chatbot sellers and purchasers — to the detriment of the long-term prosperity of people and the planet.

My perspective as a tech employee?

I don’t feel proud when I hear a CEO bragging about AI replacing workers. I don’t enjoy seeing a company claim that chatbots provide the same customer experience as humans. Nor do I appreciate organizations obliterating the materiality of artificial intelligence.

Instead, I feel moral injury.

And you, how do YOU feel?

PS. You and AI

- Are you worried about the impact of AI on your job, your organisation, and the future of the planet, but feel it’d take you years to ramp up your AI literacy?

- Do you want to explore how to responsibly leverage AI in your organisation to boost innovation, productivity, and revenue but feel overwhelmed by the quantity and breadth of information available?

- Are you concerned because your clients are prioritising AI but you keep procrastinating on learning about it because you think you’re not “smart enough”?

I’ve got you covered.